I like Jeremiah Owyang’s matrix, with its embedded point about the value of the viral loop for driving engagement once you reach advanced integration of your corporate site with your social media strategy.

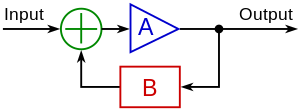

It accords with my views on how to grow online communities (where I’ve seen that viral loops are a subset of feedback loops): use such a strategy both in the viral sense above, with users, and to establish feedback loops with top contributors.

Are there any circuit-design-style simulators out there that could plug into your web analytics data and let you test out viral campaigns?
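In the absence of such a tool, a rough back-of-envelope version is easy to sketch. The snippet below is only an illustration, not a circuit simulator and not tied to any analytics product: it assumes the common simplification that each new user sends a fixed number of invites and a fixed share of those convert, and the names `seed_users`, `invites_per_user` and `conversion_rate` are hypothetical inputs you would have to estimate from your own data.

```python
def simulate_viral_loop(seed_users, invites_per_user, conversion_rate, cycles):
    """Return cumulative user totals after each cycle of a simple viral loop.

    Assumption: only the users gained in the previous cycle send invites,
    and the viral coefficient k stays constant over time.
    """
    k = invites_per_user * conversion_rate  # viral coefficient
    new_users = float(seed_users)
    total = float(seed_users)
    history = [total]  # history[0] is the seed population
    for _ in range(cycles):
        new_users = new_users * k  # users recruited by the last cohort
        total += new_users
        history.append(total)
    return history


if __name__ == "__main__":
    # Hypothetical campaign: 100 seed users, 2 invites each, 25% convert.
    for cycle, users in enumerate(simulate_viral_loop(100, 2, 0.25, 3)):
        print(cycle, users)
```

With a coefficient below 1 the loop fades out after a few cycles; at 1 or above each cohort recruits at least its own size and growth sustains itself, which is the distinction a campaign test would be trying to measure.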

I sketched this out a bit more in a recent SlideShare deck: http://www.slideshare.net/stuartgh/feedback-loo…

It’s also worth reading the comment from Bert DuMars on the value of publishing consumer-generated product (CGP) reviews on one’s site as a powerful feedback loop for driving performance:

> When you integrate CGP reviews into your branded website you are inviting additional conversation about your products and services. You are opening up to your consumers and allowing them to begin a conversation with you about what they like and do not like about your products.
>
> If you are open and honest (showing both positive and negative reviews) you not only learn how to improve your products and services, you are given the opportunity to show that you care about your consumers. We have seen culture change at our Rubbermaid and Dymo brands based on CGP reviews.
>
> We can respond faster to feedback, especially negative, and reach out to consumers to learn what went wrong. We can then adjust the product or service based on that feedback. Think of it as an ongoing, near real-time, feedback loop and a gift from your consumers.