At a glance: Apollo (NYSE: APO) is buying a majority stake in Stream Data Centers, a developer with a 4+ GW pipeline and near-term campuses in Chicago, Atlanta, and Dallas (roughly 650 MW of power capacity coming online). Translation: Apollo wants to manufacture AI-grade capacity (land + power + permits), then lock in long leases and recycle capital. That’s a different playbook from buying stabilized boxes via REITs. (Sources: Apollo; Apollo Global Management, Inc.; Barchart.com)

The needles

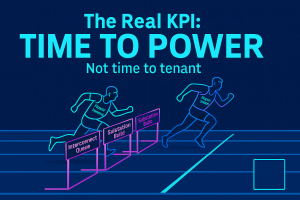

1) The bottleneck is power and permits, not tenants.

Hyperscaler demand isn’t the scarce resource; interconnects, substation timelines, and entitled land are. Developers with utility relationships and shovel-ready sites capture the biggest spread because they move from “paper megawatts” to energized capacity faster than a yield vehicle can. Apollo is effectively paying for Stream’s optionality on power across its multi-GW pipeline, which is precisely where the industry’s choke point sits. JLL expects another record year for development financing in 2025, with ~10 GW breaking ground and ~$170B of asset value needing funding; the capital is chasing power, not occupancy. (Source: JLL)

2) Follow the capital stack: private markets are setting the terms.

Public REITs optimize for steady yield on stabilized assets. Developers optimize for IRR across risk steps (site control → interconnect → pre-let → NTP → COD, where NTP is notice to proceed and COD is the commercial operation date). Apollo is putting long-term capital into those risk steps and can then term out the financing with IG-style (investment-grade-style), asset-backed structures once leases are signed. If you want the template for where this is going, look at Meta’s ~$29B Louisiana financing led by PIMCO (debt) and Blue Owl (equity): single-project, investment-grade scale, with private credit sitting where banks used to. Expect more developer + private credit pairings. (Sources: Reuters; Yahoo Finance)
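The “risk steps” logic comes down to one developer metric: the gap between a campus’s yield on cost and the cap rate at which the market values it once it is leased and energized. Below is a minimal back-of-envelope sketch. Every input (capacity, cost per MW, NOI per MW, exit cap rate) is a hypothetical placeholder, not a Stream or Apollo figure, and the function name is illustrative.

```python
def development_spread(capacity_mw, cost_per_mw, noi_per_mw, exit_cap_rate):
    """Compare what a campus costs to build with what it is worth once leased.

    yield_on_cost: stabilized NOI / all-in development cost
    spread_bps:    yield on cost minus the exit cap rate, in basis points
    value_created: stabilized value (NOI / exit cap rate) minus cost
    """
    cost = capacity_mw * cost_per_mw
    noi = capacity_mw * noi_per_mw
    yield_on_cost = noi / cost
    stabilized_value = noi / exit_cap_rate
    return {
        "yield_on_cost": yield_on_cost,
        "spread_bps": (yield_on_cost - exit_cap_rate) * 10_000,
        "value_created": stabilized_value - cost,
    }


# Hypothetical 100 MW campus: $10M/MW all-in cost, $1M/MW stabilized NOI,
# valued at a 6% cap rate once pre-leased and energized.
result = development_spread(100, 10e6, 1e6, 0.06)
print(f"yield on cost {result['yield_on_cost']:.1%}, "
      f"spread {result['spread_bps']:.0f} bps, "
      f"value created ${result['value_created'] / 1e9:.2f}B")
```

With these placeholder inputs, a 10% yield on cost against a 6% exit cap leaves about 400 bps of spread and roughly $0.67B of value on a $1B build. Each risk step retired (interconnect secured, pre-let signed, COD reached) is what lets a sponsor claim more of that gap.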

3) Why Stream, why now?

The deal formalizes the developer–finance flywheel: secure land and interconnects → pre-lease to a hyperscaler → raise cheap capital against contracted cash flows → recycle into the next campus. Stream’s specific city mix matters: Chicago, Atlanta, and Dallas combine large load growth with comparatively tractable permitting and transmission plans, versus ultra-constrained hubs. That positioning helps compress time-to-MW, which is the new currency of returns. Apollo’s own release underscores the scale: 4+ GW in the pipeline and the “deployment of billions” into U.S. digital infrastructure. (Sources: Apollo; Barchart.com)
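Why time-to-MW works as a “currency of returns” is easiest to see by holding the asset constant and moving only the energization date. The sketch below compares the IRR of the same hypothetical campus with COD on schedule versus one year late. The simplifications are assumptions: all cost is spent at t = 0, NOI is flat, and the exit value is NOI capitalized at a fixed cap rate. IRR is solved by plain bisection rather than any particular finance library.

```python
def npv(rate, cash_flows):
    """Net present value of annual cash flows, the first at t = 0."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows, lo=-0.99, hi=1.0, iterations=200):
    """IRR by bisection; assumes a single sign change (outlay first, inflows after)."""
    f_lo = npv(lo, cash_flows)
    for _ in range(iterations):
        mid = (lo + hi) / 2
        f_mid = npv(mid, cash_flows)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid  # NPV still positive: the root lies above mid
        else:
            hi = mid               # NPV turned negative: the root lies below mid
    return (lo + hi) / 2


def campus_cash_flows(build_cost, annual_noi, cod_year, hold_years, exit_cap_rate):
    """Spend build_cost at t = 0; COD at the end of cod_year; NOI for hold_years; then sell."""
    flows = [-build_cost] + [0.0] * (cod_year + hold_years)
    for year in range(cod_year + 1, cod_year + hold_years + 1):
        flows[year] = annual_noi
    flows[-1] += annual_noi / exit_cap_rate  # exit value: NOI capitalized at the exit cap rate
    return flows


# Hypothetical campus: $1,000M cost, $100M NOI, 5-year hold, 6% exit cap.
on_time = campus_cash_flows(1_000.0, 100.0, cod_year=2, hold_years=5, exit_cap_rate=0.06)
delayed = campus_cash_flows(1_000.0, 100.0, cod_year=3, hold_years=5, exit_cap_rate=0.06)
print(f"IRR with COD in year 2: {irr(on_time):.1%}")
print(f"IRR with COD in year 3: {irr(delayed):.1%}")
```

The delayed case earns a lower IRR even though rent, hold period, and exit value are identical: every inflow simply arrives one year later. That is the return a developer with faster interconnects keeps and a slower one gives away.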

4) The macro backdrop is insanely capital-hungry.

McKinsey pegs the data-center capex needed by 2030 to keep up with compute demand at $6.7T, with AI-capable facilities taking the lion’s share. In that world, “own the development spread” is a rational, repeatable strategy, especially for sponsors who can bring both patient equity and structured financing to each tranche. (Source: McKinsey & Company)

What this really signals

- Developers become the new “growth REITs.” They’re not collecting coupons; they’re monetizing milestones. That’s where the alpha sits until grid constraints ease. (Source: Datacenter Dynamics)

- Private credit is graduating to utility-scale risk-sharing. The Meta structure shows that once you have long-dated leases, you can finance a campus like a toll road or pipeline. Expect tighter spreads and more off-balance-sheet funding for hyperscalers. (Source: Reuters)

- Public-market investors aren’t shut out. If you don’t have access to Apollo-style funds, watch proxies: select data-center REITs with development pipelines, transmission and switchgear vendors, and utilities accelerating capex plans in Stream-style markets. JLL’s build forecasts give a practical map of where financing will actually clear. (Source: JLL)

Risks to the bull case (and how to track them)

- Overbuild / efficiency shock: If AI inference efficiency jumps (model compression, better GPUs), utilization assumptions could slip, pressuring lease rates. Tell: slowing pre-lets or rising concessions in developer disclosures and broker chatter; JLL’s quarterly notes make a useful barometer. (Source: JLL)

- Grid delays: Interconnect queues or local moratoria can push CODs later. Tell: slippage in targeted energization dates on the Chicago, Atlanta, and Dallas campuses, visible in Stream/Apollo updates and utility IR filings. (Source: Apollo)

- Cost of capital whiplash: If investment-grade appetite cools or private credit reprices wider, the capital-recycling math compresses. Tell: fewer large single-asset prints after the Meta deal, or materially wider spreads on campus ABS/bonds. (Source: Reuters)
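The recycling math in the cost-of-capital risk can be sketched with a common term-debt sizing rule: a lender caps the loan so that NOI covers annual interest by a minimum debt-service coverage ratio (DSCR). This is a minimal sketch under loudly stated assumptions: interest-only debt, a single DSCR covenant with no loan-to-value test, and hypothetical NOI, base-rate, and spread inputs. Real campus ABS and bond structures amortize and carry more tests, and the function names are illustrative.

```python
def max_io_loan(annual_noi, base_rate, spread_bps, min_dscr):
    """Largest interest-only loan whose annual interest is covered min_dscr times by NOI."""
    coupon = base_rate + spread_bps / 10_000
    return annual_noi / (min_dscr * coupon)


def recycle_ratio(annual_noi, build_cost, base_rate, spread_bps, min_dscr):
    """Share of the build cost a sponsor can pull back out by refinancing (capped at 100%)."""
    loan = max_io_loan(annual_noi, base_rate, spread_bps, min_dscr)
    return min(loan / build_cost, 1.0)


# Hypothetical campus: $1,000M all-in cost, $70M stabilized NOI, 1.25x DSCR covenant.
for spread in (150, 250):
    ratio = recycle_ratio(70.0, 1_000.0, base_rate=0.045, spread_bps=spread, min_dscr=1.25)
    print(f"spread {spread} bps -> {ratio:.0%} of cost recycled")
```

With these placeholder inputs, widening the spread from 150 to 250 bps cuts the share of cost the sponsor can pull out from roughly 93% to 80%. Less recycled capital means less equity available for the next campus, which is how repricing slows the flywheel.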

Investor checklist (practical signals to watch each quarter)

- MW under construction vs. energized at Stream’s peer developers: are CODs holding? (Source: Datacenter Dynamics)

- Pre-lease coverage and escalators on new campuses: are 2–3% annual bumps sticking? (Source: Datacenter Dynamics)

- Financing prints: any follow-ons to Meta’s IG-style package (size, tenor, spread). (Source: Reuters)

- Utility capex plans in IL/GA/TX that unlock substation timelines (a proxy for Stream’s city mix). (Source: JLL)

- Sponsor behavior: Does Apollo recycle quickly (refinancing or a partial sale) or hold longer? The answer will telegraph how rich the development spread remains. (Source: Apollo)
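The escalator check in the list above is compounding arithmetic, and a one-point difference in the annual bump adds up over a long hyperscale lease. A minimal sketch, assuming a hypothetical 15-year term and a year-one rent of 100 in arbitrary units (neither figure comes from Stream or any disclosed lease):

```python
def rent_schedule(year_one_rent, escalator, years):
    """Annual rent for each lease year, bumping by a fixed escalator every year."""
    return [year_one_rent * (1 + escalator) ** year for year in range(years)]


# Hypothetical 15-year lease at 100 of year-one rent (units arbitrary).
for bump in (0.02, 0.03):
    schedule = rent_schedule(100.0, bump, 15)
    print(f"{bump:.0%} escalator: final-year rent {schedule[-1]:.1f}, total {sum(schedule):.0f}")
```

Under these assumptions, the 3% lease ends the term with rent roughly 15% higher than the 2% lease, so escalators slipping toward the low end of the range erode the stabilized NOI that every later financing is sized against.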

Bottom line: Apollo didn’t just buy data centers; it bought time-to-power and the right to repeatedly monetize the development curve. In an AI buildout that needs trillions and is starved for interconnects, the developer is the fulcrum. If you’re a public-market investor, shadow that play by tracking power-rich markets, development-heavy REITs, and the cadence of private credit deals that term out risk at scale. (Sources: McKinsey & Company; JLL; Reuters; Apollo)