AI: A Bubble, But Not a Bubble (written with help from ChatGPT-5)

Or: Why the “bubble” narrative around AI misses something deeper

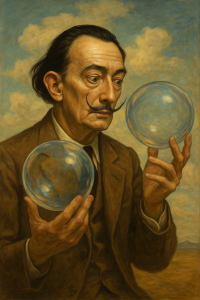

The question keeps coming up: is artificial intelligence (AI) currently in a speculative bubble that’s destined to burst, or is this something more enduring — a transformational wave disguised in bubble clothing?

Let’s unpack the paradox: on one hand, many of the classic hallmarks of a bubble are present. On the other, there are structural, strategic and systemic factors that suggest this is more than just a bubble. Below is a point-by-point breakdown of the key indicators.

1. Bubble-Warning Signals

These are the red flags, the parts of the story that say: yes, this does look like a bubble.

- Hype vs returns: Global investment into AI startups exceeded $50 billion in 2023, yet very few of these companies are profitable or generating recurring revenue (BuiltIn).

- Valuations stretching history: Nvidia’s market cap crossed $2 trillion in 2024 — the fastest growth in tech history — stoking concerns that AI-driven valuation multiples are disconnected from current fundamentals.

- Warnings from the top: OpenAI CEO Sam Altman himself said in 2024, “Yes, it’s a bubble… and that’s OK.”

- Concentration & fragility: As of mid-2025, the top five AI companies (Nvidia, Microsoft, Alphabet, Meta, and Amazon) control over 85% of the global AI compute infrastructure.

- Speculative patterning: Startups with no product and mere “AI wrappers” around ChatGPT are raising millions in pre-seed funding, echoing dot-com era exuberance.

- AI to the rescue? Not so fast: an SVB chart is pointedly titled “Chat, What’s Another Word for Bubble?”
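
To make the concentration point above more concrete, here is a minimal sketch of two standard market-structure measures: the top-k concentration ratio and the Herfindahl-Hirschman Index (HHI). The individual shares below are hypothetical, invented only so that the top five sum to the 85% figure cited above; they are not real data about any company.

```python
def concentration_ratio(shares_pct, k):
    """Combined share (in percent) held by the k largest players."""
    return sum(sorted(shares_pct, reverse=True)[:k])


def herfindahl_index(shares_pct):
    """Herfindahl-Hirschman Index: sum of squared percentage shares (0 to 10,000)."""
    return sum(s ** 2 for s in shares_pct)


# HYPOTHETICAL shares of AI compute, in percent. Illustration only:
# the top five are chosen to add up to 85%, the rest is split arbitrarily.
shares_pct = [25, 20, 15, 15, 10, 5, 5, 5]

cr5 = concentration_ratio(shares_pct, 5)
hhi = herfindahl_index(shares_pct)

print(f"CR5 = {cr5}%, HHI = {hhi}")
```

With these made-up inputs, the top-five ratio is 85% and the HHI is 1,650. The useful part is not the specific number but the habit: tracking a measure like this over time shows whether the fragility is growing or easing.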

2. But It’s Not Just a Bubble

Now the other side: what suggests AI is more than froth and fear.

- Infrastructure build-out: $200B+ in projected spending on AI data centers between 2024 and 2027 (McKinsey). These aren’t ephemeral assets; they’re physical, long-term capital investments.

- Government policy shifts: The EU, U.S., China, and UAE have all declared national AI strategies. The UK launched its “Frontier AI Taskforce” with a £100M fund. These are state-level stakes.

- Societal adoption: ChatGPT reached 100 million users in two months — the fastest adoption of any consumer app in history. It’s now integrated into Office365, Shopify, Duolingo, and dozens of platforms.

- Cross-system integration: AI is now used in logistics, drug discovery, customer service, legal contracts, climate modeling, and more. It’s not one vertical; it’s multi-sectoral.

- Decentralised movement: Projects like SingularityNET, Bittensor, and Fetch.ai aim to provide counterweights to centralized AI monopolies. Though small in market cap, their ideology is sticky and increasingly resonant.

- Talent pipeline: Top universities report record-breaking enrolment in machine learning & data science tracks. MIT saw a 73% increase in AI-related thesis topics from 2021 to 2024.

- Earnings behind the spending: Federal Reserve Chair Jerome Powell has argued that, unlike the dot-com boom, AI spending isn’t a bubble. In his words: “I won’t go into particular names, but they actually have earnings.”

3. Why This Matters

Because how one interprets this moment drives strategy.

- Treating AI as just a bubble? You risk ignoring long-term infrastructure and missing the strategic layer.

- Treating AI as entirely free of hype? You risk capital misallocation and being blindsided by volatility.

Instead, the correct lens may be dual-layered: short-term froth, long-term wave.

4. Key Signals That It’s Not Just a Bubble

Here are seven indicators that suggest AI is here for the long haul:

- $200B+ in AI infra spend (2024-2027) — Source: McKinsey

- 40+ nations with national AI plans — Source: OECD AI Policy Observatory

- 100M+ users for ChatGPT within 60 days of launch

- AI cited in 60%+ of S&P 500 earnings calls in 2024 (Goldman Sachs)

- 5,000+ AI-related job listings on LinkedIn UK in July 2025 alone

- AI + Crypto projects growing: Over $4.3B market cap in AI-token sector (CoinGecko, Q2 2025)

- Cross-sector resilience: AI use cases now span healthcare, finance, media, law, education, and urban planning

5. Strategy for Navigators

If you’re an investor, policymaker, or DeSci founder:

- Use caution: Recognize speculative behaviour where it exists.

- Track fundamentals: Focus on infrastructure, partnerships, developer traction.

- Scan for decentralisation: Keep eyes on AI x Web3 convergence.

- Measure what matters: User adoption, SDK integrations, compute dependencies, data partnerships.

- Diversify bets: Don’t just follow LLMs and chips — track edge AI, tokenised compute, AI governance tools.

6. Final Thought

Yes, there is a bubble vibe to AI right now. The hype is real, the valuations are stretched, and not every company will survive.

But it’s not just a bubble.

It’s a complex, layered, evolving ecosystem with speculative peaks but deep structural roots. Infrastructure, strategy, adoption and decentralisation all suggest this is not a passing moment. The wave has momentum.

“It may wobble, it may correct, it may reshape — but the foundations are being laid for a long-term wave, not just a feast followed by famine.”